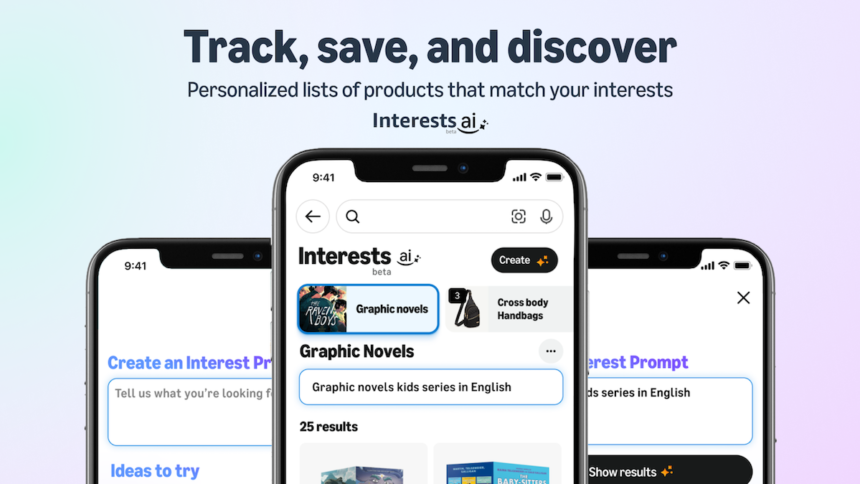

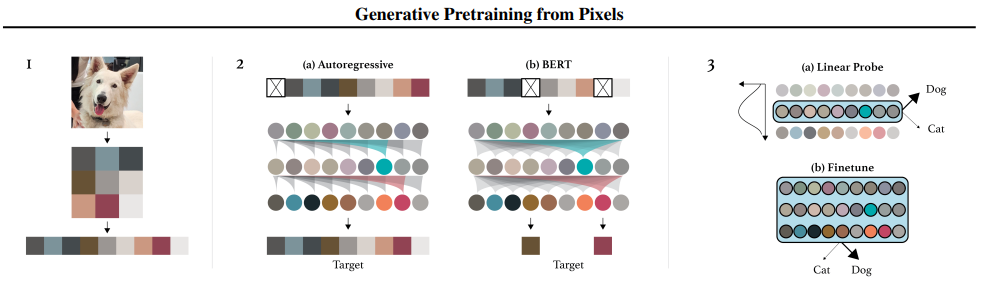

We’ve seen what GPT-style models can do for language. Now, OpenAI has applied that same idea to images. Instead of predicting the next word, this model predicts the next pixel—and it works surprisingly well.

In a project called iGPT (Image GPT), researchers trained a Transformer on sequences of pixels, without any labels, using the same architecture that powers GPT-2. The result? The model not only learns to generate images, but also forms image representations good enough to rival state-of-the-art supervised methods.

No Convolutions, No Labels—Just Pixels

Most image models rely on convolutional layers, which are built to exploit local spatial patterns. iGPT doesn’t. It flattens each image into a 1D sequence of pixels and feeds that sequence into a plain Transformer, treating pixels like tokens.
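To make the flattening step concrete, here is a minimal sketch. It assumes raster order (left to right, top to bottom) and that each pixel has already been reduced to a single integer token; the helper names `flatten_image` and `unflatten` are made up for illustration.

```python
def flatten_image(image):
    """Flatten a 2D grid of pixel tokens into a 1D sequence in raster order
    (left to right, top to bottom). Raster order is an assumption here."""
    return [pixel for row in image for pixel in row]


def unflatten(sequence, width):
    """Inverse of flatten_image: cut the sequence back into rows."""
    return [sequence[i:i + width] for i in range(0, len(sequence), width)]


# A toy 3x3 "image" whose pixels are already integer tokens.
image = [
    [0, 1, 2],
    [3, 4, 5],
    [6, 7, 8],
]
tokens = flatten_image(image)
print(tokens)  # [0, 1, 2, 3, 4, 5, 6, 7, 8]
```

After this step the Transformer only sees a list of tokens; any notion of "the pixel directly above" has to be learned from data.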

Even though this ignores the 2D structure of images, iGPT still learns surprisingly rich features—just by trying to predict pixels one by one. The team tested two training objectives:

- Auto-regressive (AR): Predict the next pixel, in order.

- BERT-style (Masked): Predict missing pixels from their surroundings.

The AR objective worked better for learning representations, especially when the features were used as-is, before any fine-tuning.
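The two objectives above differ only in how inputs and targets are built from the same pixel sequence. The sketch below illustrates that difference on a toy sequence. The `MASK` token id, the 15% default masking rate, and the function names are assumptions for illustration, not details taken from this article.

```python
import random

MASK = -1  # hypothetical placeholder token id for a hidden pixel


def autoregressive_pairs(tokens):
    """Inputs are tokens[:-1]; the target at each position is the next token."""
    return tokens[:-1], tokens[1:]


def masked_pairs(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of positions; targets are the original values there."""
    rng = random.Random(seed)
    inputs, targets = [], {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets[position] = token  # the model must recover this value
        else:
            inputs.append(token)
    return inputs, targets


tokens = [5, 9, 2, 7, 7, 1]
ar_inputs, ar_targets = autoregressive_pairs(tokens)
print(ar_inputs)   # [5, 9, 2, 7, 7]
print(ar_targets)  # [9, 2, 7, 7, 1]

masked_inputs, masked_targets = masked_pairs(tokens, mask_prob=0.5)
print(masked_inputs, masked_targets)
```

In the AR case every position contributes a prediction, while in the masked case only the hidden positions do.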

Linear Probes: How Well Did It Learn?

To test how well iGPT understands images, researchers used linear probes. That means freezing the pretrained model and training only a simple linear classifier on top of its features. If the model really learned useful features, that classifier should be enough to separate classes like cats and dogs.
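As a rough illustration of the linear-probe idea, the sketch below trains a logistic-regression classifier on top of fixed features. The `frozen_features` function is a hypothetical stand-in for a pretrained model (it is not iGPT), and the toy "images" are random numbers; the point is only that the feature extractor is never updated.

```python
import math
import random


def frozen_features(image):
    """Stand-in for a frozen pretrained model (hypothetical): it returns the
    mean and range of the pixel values and is never trained."""
    return [sum(image) / len(image), max(image) - min(image)]


def train_linear_probe(features, labels, epochs=200, lr=0.5):
    """Fit a logistic-regression classifier; only these weights are learned."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(w * v for w, v in zip(weights, x)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            error = p - y  # gradient of the log loss with respect to z
            weights = [w - lr * error * v for w, v in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x):
    return 1 if sum(w * v for w, v in zip(weights, x)) + bias > 0 else 0


# Toy data: class 0 images are dark, class 1 images are bright.
rng = random.Random(0)
images, labels = [], []
for i in range(40):
    label = i % 2
    low = 0.6 if label else 0.0
    images.append([rng.uniform(low, low + 0.4) for _ in range(16)])
    labels.append(label)

features = [frozen_features(img) for img in images]  # the model stays frozen
weights, bias = train_linear_probe(features, labels)
accuracy = sum(predict(weights, bias, x) == y
               for x, y in zip(features, labels)) / len(labels)
print(accuracy)
```

If the features were uninformative, no choice of linear weights would separate the classes, which is why probe accuracy is read as a measure of feature quality.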

The results were impressive:

| Dataset | iGPT Accuracy (Linear Probe) | ResNet-152 Accuracy (Supervised) |
| --- | --- | --- |
| CIFAR-10 | 96.3% | 94% |
| CIFAR-100 | 82.8% | 78% |
| STL-10 | 97.1% | N/A |

iGPT beat even supervised baselines—without ever seeing labels during pretraining.

Bigger Is Better: Scaling Matters

Just like in language, larger models performed better. iGPT came in three sizes:

- iGPT-S (76M parameters)

- iGPT-M (455M)

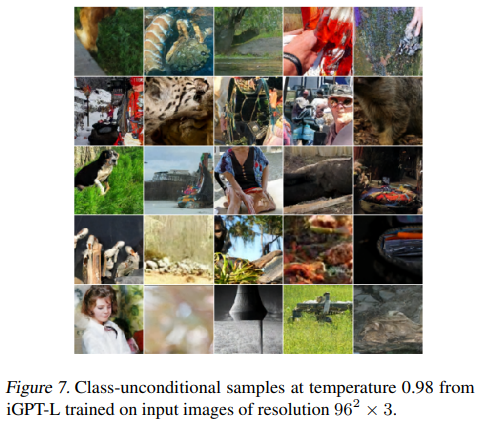

- iGPT-L (1.4B)
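The parameter counts in the list above can be sanity-checked with a common rule of thumb for Transformers: roughly 12 × layers × width² weights, ignoring embeddings, biases, and layer norms. The layer counts and widths below are not stated in this article; they are assumptions taken from the iGPT paper, so treat the result as a back-of-the-envelope check.

```python
def approx_transformer_params(num_layers, d_model):
    """Rough count: each block has about 4*d^2 attention weights plus
    8*d^2 feed-forward weights (embeddings, biases, layer norms ignored)."""
    return 12 * num_layers * d_model ** 2


# (layers, width) pairs are assumptions, not given in the article.
configs = {"iGPT-S": (24, 512), "iGPT-M": (36, 1024), "iGPT-L": (48, 1536)}
for name, (layers, width) in configs.items():
    millions = approx_transformer_params(layers, width) / 1e6
    print(f"{name}: ~{millions:.0f}M parameters")
# iGPT-S: ~75M parameters
# iGPT-M: ~453M parameters
# iGPT-L: ~1359M parameters
```

The estimates land close to the quoted 76M, 455M, and 1.4B; the small gaps are what the ignored terms would account for.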

The biggest model, iGPT-L, gave the best results—especially when probing middle layers. Interestingly, the most useful features weren’t at the top layer but somewhere in the middle. This suggests that the model first builds a rich understanding of the image, and only then starts predicting fine-grained pixel details.

When Data Is Low, iGPT Shines

In a low-data setting, iGPT still performed well. With just 4 labeled images per class on CIFAR-10, it hit 73.2% accuracy. With 25 images per class, that jumped to 87.6%—all using a simple linear classifier on frozen features.
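For a sense of scale, 4 images per class on a 10-class dataset means only 40 labeled examples in total, and 25 per class means 250. A minimal sketch of drawing such a subset is shown below; the `sample_per_class` helper and the toy label list are illustrative assumptions, not the authors' sampling procedure.

```python
import random
from collections import defaultdict


def sample_per_class(labels, per_class, seed=0):
    """Return indices of `per_class` randomly chosen examples for each label."""
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)
    rng = random.Random(seed)
    chosen = []
    for label in sorted(by_label):
        chosen.extend(rng.sample(by_label[label], per_class))
    return chosen


# Toy stand-in for CIFAR-10 labels: 10 classes, 100 examples each.
labels = [i % 10 for i in range(1000)]
print(len(sample_per_class(labels, 4)))   # 40
print(len(sample_per_class(labels, 25)))  # 250
```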

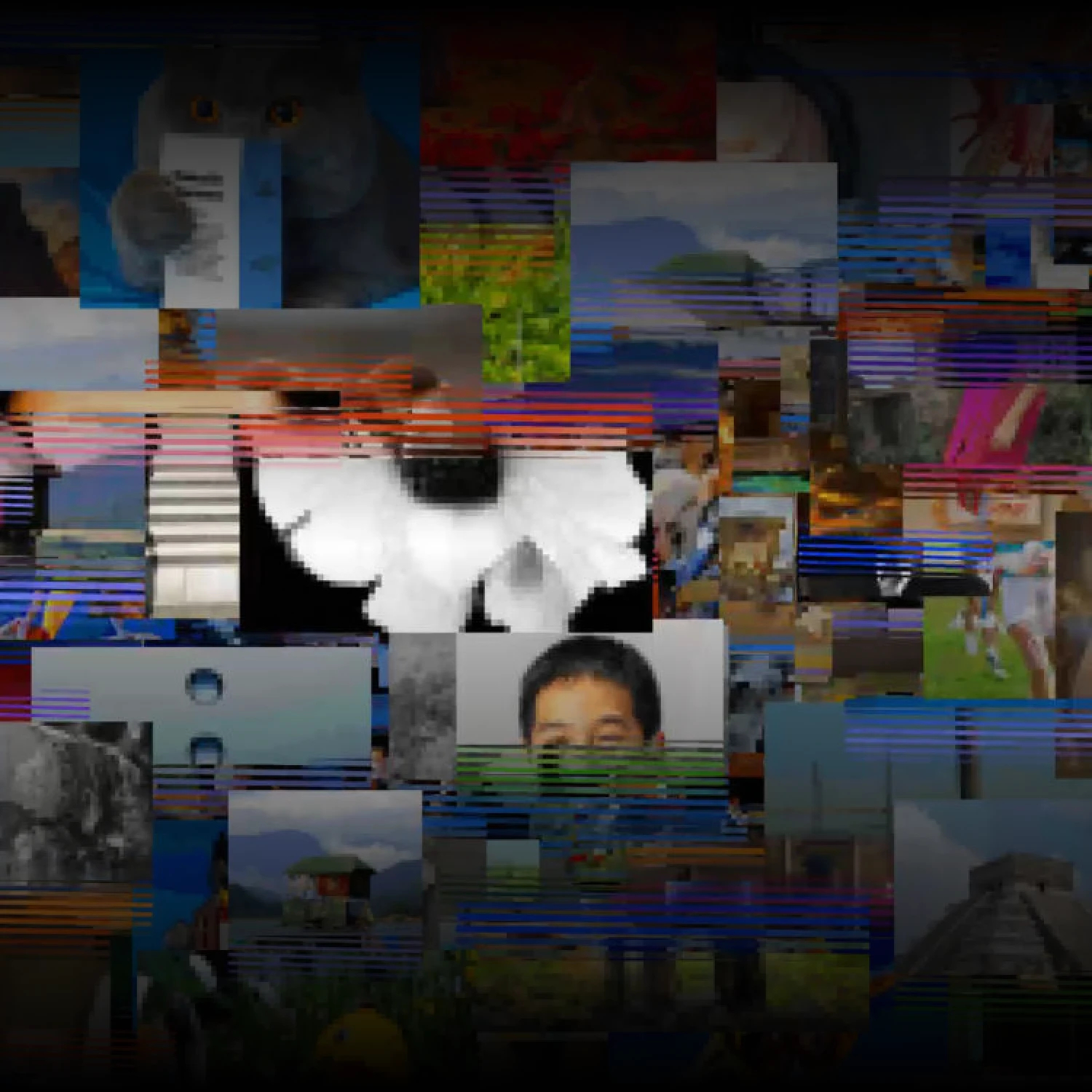

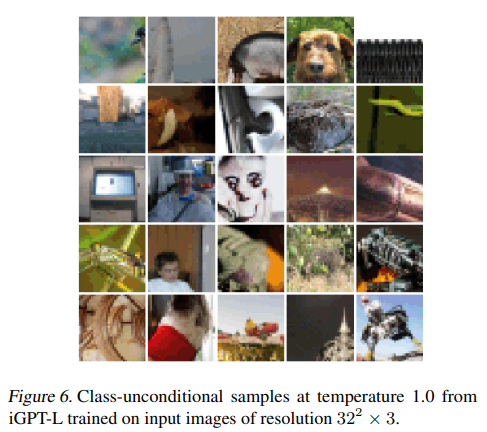

And Yes, It Can Generate Images Too

Because iGPT is trained to predict pixels, it can also create new images—completely from scratch. While sample quality isn’t the main goal, the generated images look surprisingly coherent given the low resolution and lack of label conditioning.
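Generation with an autoregressive model is a simple loop: predict a distribution for the next pixel, sample from it, append, repeat. The sketch below shows that loop with a hypothetical stand-in, `toy_next_pixel_logits`, in place of the trained Transformer, so the output is not a meaningful image.

```python
import math
import random


def toy_next_pixel_logits(prefix, vocab_size):
    """Hypothetical stand-in for the trained model: favors repeating the
    previous pixel value so samples look locally smooth."""
    logits = [0.0] * vocab_size
    if prefix:
        logits[prefix[-1]] = 2.0
    return logits


def sample_image(width, height, vocab_size, seed=0):
    """Draw pixels one at a time, each conditioned on all earlier pixels."""
    rng = random.Random(seed)
    pixels = []
    for _ in range(width * height):
        logits = toy_next_pixel_logits(pixels, vocab_size)
        weights = [math.exp(v) for v in logits]  # unnormalized softmax
        pixels.append(rng.choices(range(vocab_size), weights=weights)[0])
    # Reshape the 1D sample back into rows for display.
    return [pixels[r * width:(r + 1) * width] for r in range(height)]


for row in sample_image(width=8, height=4, vocab_size=4):
    print(row)
```

Because every pixel requires a full forward pass over the prefix, sampling cost grows with image size, which is one reason the article's examples are low resolution.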

What About BERT for Images?

The team also tried a BERT-style masking objective. While it underperformed the AR model on linear probes, it nearly caught up after full fine-tuning. So yes, BERT can learn from pixels too—but AR seems better at building strong image features right out of the box.

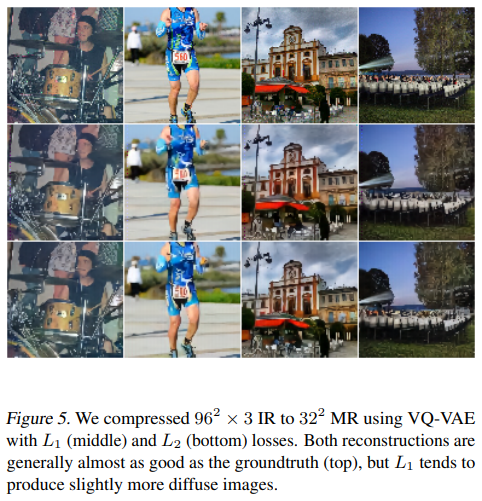

Pushing Beyond Pixels: Compressing Context with VQ-VAE

High-resolution images are hard for Transformers, because the cost of self-attention grows quadratically with sequence length. To work around that, OpenAI used a method called VQ-VAE, which compresses images into a shorter sequence of discrete codes.
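The "discrete codes" part can be illustrated with the quantization step alone: each continuous vector produced by an encoder is replaced by the index of its nearest codebook entry. This is a minimal sketch with a hand-written codebook; a real VQ-VAE learns both the encoder and the codebook, and none of the numbers here come from iGPT.

```python
def nearest_code(vector, codebook):
    """Index of the codebook entry closest to `vector` (squared distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(codebook)), key=lambda i: sq_dist(vector, codebook[i]))


def quantize(latents, codebook):
    """Replace each continuous latent vector with a discrete code index."""
    return [nearest_code(v, codebook) for v in latents]


# Hypothetical 2D codebook with 3 entries and a few made-up encoder outputs.
codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
latents = [[0.1, -0.1], [0.9, 1.2], [0.2, 0.8], [0.6, 0.7]]
print(quantize(latents, codebook))  # [0, 1, 2, 1]
```

The resulting list of integers is what the Transformer would model in place of raw pixels.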

This trick allowed iGPT to work with 192×192 images while keeping the context length manageable. And it worked: using features from compressed representations, iGPT hit 69% accuracy on ImageNet linear probes—matching contrastive learning methods like MoCo and AMDIM.
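A quick calculation shows why context length matters. The sketch below counts tokens and pairwise attention entries for square images, assuming one token per grid cell. The downsampling factor of 4 is a hypothetical value chosen for illustration; the article does not say how much the VQ-VAE shrinks the grid.

```python
def sequence_length(side, downsample=1):
    """Tokens for a square image, assuming one token per grid cell after
    reducing each side by `downsample` (a hypothetical factor)."""
    return (side // downsample) ** 2


def attention_entries(n):
    """Full self-attention compares every position with every other one."""
    return n ** 2


for side, factor in [(32, 1), (64, 1), (192, 1), (192, 4)]:
    n = sequence_length(side, factor)
    print(f"{side}x{side}, downsample {factor}: "
          f"{n} tokens, {attention_entries(n):,} attention entries")
# 32x32, downsample 1: 1024 tokens, 1,048,576 attention entries
# 64x64, downsample 1: 4096 tokens, 16,777,216 attention entries
# 192x192, downsample 1: 36864 tokens, 1,358,954,496 attention entries
# 192x192, downsample 4: 2304 tokens, 5,308,416 attention entries
```

Under these assumptions, shrinking each side by 4 cuts the token count by 16 and the attention matrix by 256.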

What This Means for AI

The takeaway? Transformers trained to predict pixels—without labels or convolutions—can learn powerful, general-purpose image features. This suggests a future where the same model type could handle language, images, audio, and video, all with the same architecture and training methods.

There are still challenges: training is expensive, high-res inputs are tricky, and large models are needed. But iGPT shows that generative pretraining, once a language-only strategy, has serious potential in vision too.